When AI ‘Fictions’ Redirect History: Generative Models, Historiography and Misinformation

Introduction

Artificial Intelligence, particularly in the realm of Natural Language Generation (NLG), has advanced rapidly with the development of sequence-to-sequence deep learning technologies such as Transformer-based language models (Sajun et al., 2024). In addition, large language models (LLMs) enable rapid synthesis and analysis of vast textual corpora.

Despite these technological breakthroughs, which enable fluent and coherent text generation, a critical challenge persists: the phenomenon known as “hallucination.” AI hallucination refers to the generation of text that is either nonsensical or unfaithful to the source content, producing information that may be incorrect or unverifiable (Ji et al., pp. 3-4). The phenomenon is akin to psychological hallucination, in which unreal perceptions feel real. Similarly, hallucinated AI text appears fluent and natural but lacks grounding in factual or source data.

The Phenomenon of AI Hallucinations

AI hallucinations are defined as outputs produced by generative models that are linguistically plausible yet factually incorrect, entirely fabricated, or unverifiable (Augenstein et al., 2024). This phenomenon occurs because LLMs generate text by predicting the next word in a sequence based on statistical patterns learned from vast training data, rather than by drawing on explicit fact verification or structured reasoning over knowledge (Özer et al., 2024).
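The mechanism described above can be illustrated with a deliberately tiny sketch. It is not how an LLM is built: it assumes a bigram counter in place of a neural network, and the three-sentence corpus is invented for illustration. It shows only the general point that choosing the statistically most likely next word can yield a fluent sentence that appears nowhere in the training data and contradicts it.

```python
from collections import Counter, defaultdict

# Toy corpus, invented for illustration. Within this corpus, the charter
# was signed in London, and "paris" is the most common word after "in".
corpus = [
    "the treaty was signed in paris",
    "the accord was signed in paris",
    "the charter was signed in london",
]

# Count which word follows which. These counts are the model's only "knowledge".
follow = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for current, nxt in zip(words, words[1:]):
        follow[current][nxt] += 1


def generate(prompt, max_words=10):
    """Greedily append the most frequent next word; no fact is ever checked."""
    words = prompt.split()
    for _ in range(max_words):
        candidates = follow.get(words[-1])
        if not candidates:
            break  # no observed continuation for the last word
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


print(generate("the charter"))
```

Given the prompt "the charter", the sketch continues with "was signed in paris", because "paris" follows "in" more often than "london" does. The result is grammatical and confident, yet false with respect to the corpus.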

The “black-box nature” of these models, as Augenstein et al. explain, obscures the origins of hallucinated content, complicating efforts to detect and correct errors.

Hallucinations are further categorized into intrinsic—contradictory to input data or context—and extrinsic—unverifiable or fictional additions (Zhou et al., 2020; Özer et al., 2024).

In historical research, hallucinations can manifest as invented events, fake citations, misquoted archival material, or fictional interpretations, which AI confidently presents without qualifiers or disclaimers. The danger lies in the output’s convincing form, which can mislead both scholars and the public (El Ganadi & Ruozzi, 2024). In the study of history, “uncertainty” is often a tool in its own right and a starting point for deeper investigation and argumentation. There is less risk in acknowledging uncertainty than in asserting information as fact without sufficient knowledge.

Risks of Hallucinations to Historiographical Integrity

Historiography depends mainly on rigorous methodologies that emphasize source evaluation, corroboration, and contextualization. Given how widely AI models are now used in scholarly production, the incursion of AI hallucinations into historical narratives threatens these foundations by introducing unverified or false information that may obscure or distort historical truths (AHA, 2025). Unlike deliberate misinformation campaigns driven by ideological motives, AI hallucinations arise without intent, yet they bear similar consequences by reshaping public perception and academic understanding.

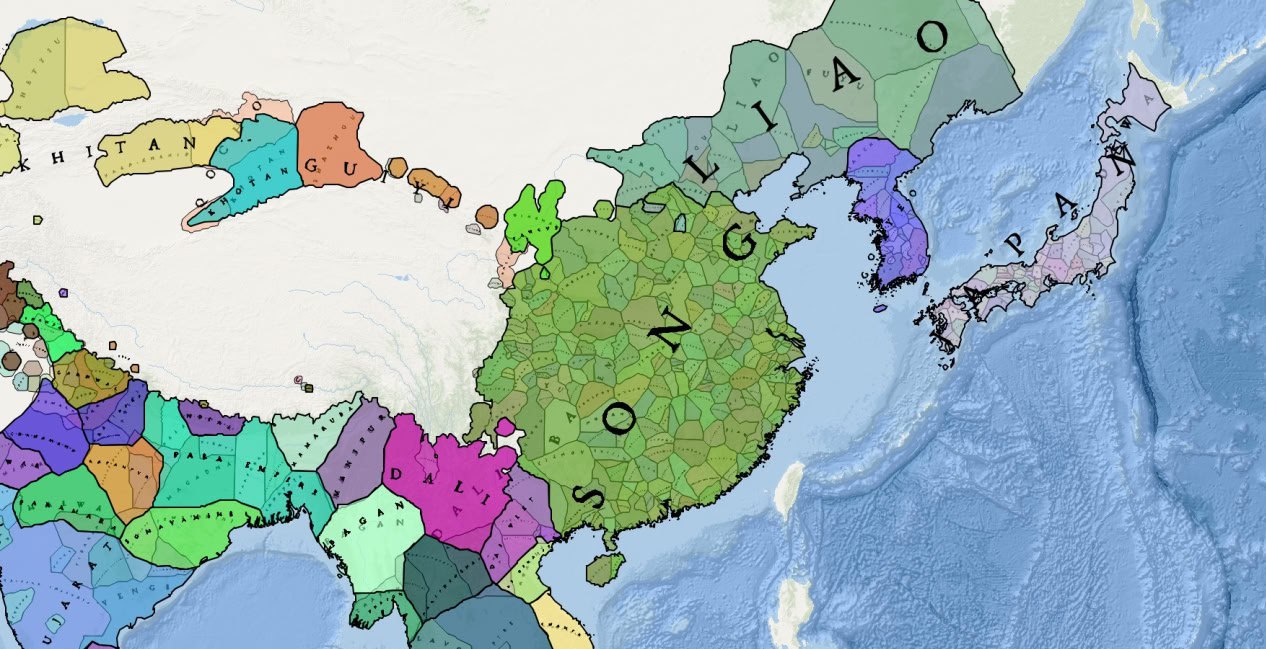

Furthermore, one important risk is the distortion of sensitive historical episodes such as genocides or colonial histories. The generation of fabricated “facts” about such events fosters harmful denialism and revisionism, undermining collective memory and justice efforts (UNESCO, 2024). Moreover, by replicating existing biases encoded in training data, hallucinations reinforce dominant perspectives while marginalizing underrepresented voices, further skewing the historiographical balance (El Ganadi & Ruozzi, 2024).

Unlike traditional misinformation, which involves human agency, motivations, and often intentional deception, AI hallucinations are systemic byproducts of model architectures and training processes. Augenstein et al. (2024) highlight that AI systems lack epistemic awareness and cannot self-correct or recognize falsehoods autonomously. This fundamental difference complicates the applicability of conventional misinformation theories, such as motivated reasoning or propaganda models, to AI-generated content.

Further, AI hallucinations emerge from vulnerabilities at multiple layers, such as biases and gaps in training data, opaque learning processes, and imperfect downstream content filtering (Augenstein et al., 2024). This multi-layered structural complexity means hallucinations are not merely accidental errors but inherent risks requiring novel oversight and mitigation frameworks.

The digital ecosystems we now live in (social media, news aggregators, and search engines) amplify the risks of AI hallucinations by facilitating rapid and widespread dissemination. Users often lack the expertise or inclination to critically assess AI-generated historical content, leading to uncritical acceptance of fabricated narratives. Surveys reveal that a growing proportion of people rely on AI tools for knowledge acquisition. Given this growing role in information-seeking behavior, hallucinations pose threats to public history literacy and democratic discourse (Augenstein et al., 2024).

Role of Historians and Educators

To safeguard historiographical integrity, historians must incorporate digital literacy and AI literacy into their research practices. Recognizing the limitations of AI requires historians to critically evaluate AI-generated content against primary sources and established scholarship, emphasizing uncertainty and methodological rigor (AHA, 2025).

This reassessment extends to historians’ pedagogy, where curricula must prepare students to interrogate AI-generated texts and understand the epistemological challenges posed by AI hallucinations (AHA, 2025).

Furthermore, historians and AI developers should collaborate to enhance model transparency, incorporate mechanisms for factual verification, and improve provenance tracking of AI outputs to reduce hallucination incidence (El Ganadi & Ruozzi, 2024). For example, retrieval augmentation techniques, such as those proposed by Shuster et al. (2021), have shown promise in reducing hallucinations by grounding AI responses in external factual sources.
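The idea of grounding responses in external sources can be sketched in a few lines. This is a simplified stand-in, not the method of Shuster et al.: it assumes plain word overlap instead of a neural retriever, it quotes the retrieved passage instead of generating text from it, and the archive entries, document identifiers, and function names are all hypothetical. What it illustrates is the principle that an answer is either tied to an identifiable source or withheld.

```python
import re

# Hypothetical mini-archive with invented entries. A real system would
# query a curated, verified historical database instead.
ARCHIVE = {
    "doc-001": "The municipal council approved the harbour expansion in 1887.",
    "doc-002": "The harbour expansion was completed after six years of construction.",
}

STOPWORDS = {"the", "a", "an", "in", "of", "was", "were", "did",
             "when", "what", "who", "after"}


def content_words(text):
    """Lowercase the text and keep only non-stopword tokens."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower())
            if w not in STOPWORDS}


def retrieve(question, min_overlap=2):
    """Return (doc_id, passage) with the largest word overlap, or None if too weak."""
    query = content_words(question)
    best_id, best_score = None, 0
    for doc_id, passage in ARCHIVE.items():
        score = len(query & content_words(passage))
        if score > best_score:
            best_id, best_score = doc_id, score
    if best_score < min_overlap:
        return None
    return best_id, ARCHIVE[best_id]


def grounded_answer(question):
    """Quote a retrieved passage with its provenance, or decline to answer."""
    hit = retrieve(question)
    if hit is None:
        return "No supporting source found; the question cannot be answered from the archive."
    doc_id, passage = hit
    return f"{passage} [source: {doc_id}]"


print(grounded_answer("When did the council approve the harbour expansion?"))
print(grounded_answer("Who led the cavalry charge?"))
```

The first question is answered with a passage and its source identifier. The second has no support in the archive, so the sketch declines rather than inventing an answer, which mirrors the preference for acknowledged uncertainty over unsupported assertion.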

Metadata standards and factual consistency evaluation methods developed in natural language processing, such as question-answering approaches to verify summary accuracy (Wang, Cho, & Lewis, 2020) and hallucination detection frameworks (Zhou et al., 2020), can be adapted for historical AI applications to flag doubtful outputs.

Mitigation Strategies

1) Developing AI models augmented with retrieval-based fact-checking systems that reference verified historical databases can reduce hallucinated content (Augenstein et al., 2024).

2) Implementing metadata standards that label and contextualize AI-generated historical content helps users identify its nature and reliability (AHA, 2025).

3) Encouraging interdisciplinary collaborations between historians, computer scientists, ethicists, and policymakers facilitates holistic AI governance frameworks emphasizing accountability and ethical use in history research and dissemination (AHA, 2025; UNESCO, 2024).

4) Strengthening public digital literacy initiatives that educate users about AI’s capabilities and limitations empowers citizens to critically navigate AI-produced historical narratives (World Economic Forum, 2025).
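As one possible reading of the second strategy, a label attached to AI-generated historical content might look like the sketch below. The field names, the class `AIContentLabel`, and the wording of the notes are illustrative assumptions and do not correspond to any published metadata standard.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class AIContentLabel:
    """Hypothetical provenance label; fields are illustrative, not a standard."""
    generator: str                 # name of the model or tool that produced the text
    generated_on: str              # ISO 8601 date
    human_reviewed: bool           # whether a historian has checked the text
    sources_checked: list = field(default_factory=list)

    def reliability_note(self):
        """Summarize in plain language how far the content has been verified."""
        if self.human_reviewed and self.sources_checked:
            return "AI-generated; reviewed by a historian against the listed sources."
        if self.human_reviewed:
            return "AI-generated; reviewed by a historian, no sources listed."
        return "AI-generated; not verified. Treat all claims as unconfirmed."


def label_content(text, label):
    """Bundle the text with its label and a reader-facing note as JSON."""
    record = {
        "text": text,
        "label": asdict(label),
        "note": label.reliability_note(),
    }
    return json.dumps(record, ensure_ascii=False, indent=2)


draft = AIContentLabel(generator="example-model", generated_on="2025-01-15",
                       human_reviewed=False)
print(label_content("Draft summary of a council meeting.", draft))
```

Keeping the note separate from the raw fields lets a publishing platform display a short warning to readers while preserving the structured record for archivists.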

Conclusion

Despite the prevalence of hallucinations, generative AI can enhance archival research and multilingual translation, allowing historians to focus on interpretation, provided that human oversight is maintained (Liu et al., 2021; Lebret et al., 2016; Res Obscura, 2023).

Understanding hallucinations as a structural characteristic rather than a defect necessitates continuous vigilance and evolving historiographical practices (Özer et al., 2024).

AI hallucinations—fabricated yet plausible outputs arising from model architecture and data vulnerabilities—pose novel risks to historiography and public historical understanding.

Addressing these requires interdisciplinary collaboration, transparency, critical educational practices, and robust ethical frameworks to harness AI’s potential responsibly while safeguarding historical truth and collective memory.