When Machines Learn to Write: Artificial Intelligence and the Limits of Human-Like Creativity

Introduction

Artificial intelligence has woven itself into the fabric of culture. It crafts poems, mimics satire, and sketches novel outlines. Yet, public debate fixates on a simplistic question: can machines be creative?

This framing misses the deeper issue: can generative systems move beyond fluent imitation to embody authorial intent? What appears creative is often, beneath the surface, pattern completion (Franceschelli, 2024).

From Probability to Prose: What LLMs Do

Large language models (LLMs) generate text by predicting the next word (more precisely, the next token) from statistical patterns extracted from vast datasets. The result sounds right: coherent, polished, and often persuasive. But writing is more than fluency; it’s a deliberate act of balancing knowledge, context, and purpose.

LLMs excel at form but struggle with the purpose behind a text. Studies of culturally specific material show that models can capture rhythm but miss the social critique embedded in the words (Škobo & Šović, 2025a).

For instance, an LLM might emulate a satirical writer’s cadence but fail to convey the cultural weight of their commentary on societal norms, resulting in a hollow echo of wit.

This gap highlights a core limit: LLMs aggregate patterns, not experiences. In applications such as reconstructing historical texts, AI can summarize or translate but cannot grasp the texts’ deeper significance. Human creativity thrives on intent, which machines can simulate but do not possess.

Imitation Versus Authorship

Human authorship intertwines expression with responsibility. A paragraph reflects deliberate choices: what to include, omit, or imply. Generative systems produce text without this burden, which raises a pressing question in academic and cultural settings: who owns a machine-generated claim?

An emerging consensus calls for transparency: disclose AI’s role, limit it to tasks such as summarizing, and document its use so that human and algorithmic contributions can be clearly distinguished (Watson, 2025).

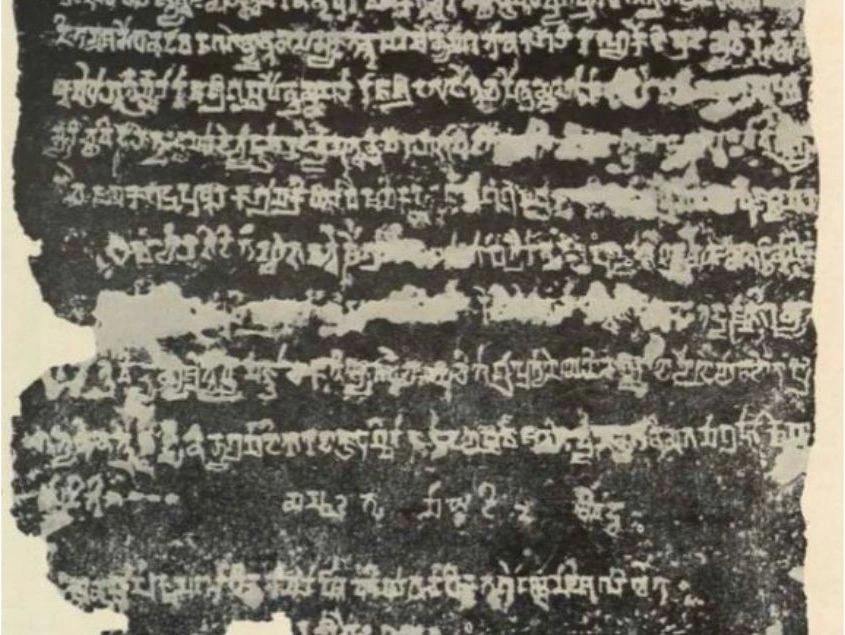

To illustrate, an LLM might transcribe a 19th-century manuscript with precision, but only a human can interpret its social or political subtext. AI supports the process (scanning archives, suggesting connections), but the interpretive leap remains human, which ensures that narratives retain depth and accountability in educational and cultural contexts.

Humor as a Stress Test

Humor sharply exposes AI’s limits. A joke is a social script, relying on timing, shared history, and cultural boundaries. LLMs, trained on diverse global data, produce witty structures but often miss the tacit knowledge that makes punchlines resonate.

In experiments with Balkan oral storytelling, AI-generated anecdotes were structurally sound but lacked the layered irony tied to local history (Škobo & Šović, 2025a). The result amused superficially but failed to connect with native audiences, showing that creativity is not just novelty but relevance, rooted in a context that machines struggle to inhabit.

In educational settings, this limitation becomes a teaching tool. Comparing AI outputs to human-crafted texts highlights the gap between form and meaning, fostering critical engagement with technology and its role in cultural representation.

Style Without Understanding

Style transfer (mimicking a writer’s voice) is a technical triumph but a conceptual challenge. Prompt engineering can make an LLM echo a Renaissance scholar’s prose, but because the model does not grasp the era’s philosophical debates, the output risks becoming caricature.

This matters in literature, where style is not decoration but an index of judgment. A writer’s irony targets an institution or belief; an LLM mimics its cadence while remaining indifferent to its aim.

In cultural preservation, reconstructing voices demands more than linguistic mimicry. Without human insight, AI risks reducing complex legacies to static patterns, a danger that becomes visible when historical figures are emulated in interactive applications.

Creativity Beyond Prediction

Creativity is relevant surprise: deviation from expectation with purpose. LLMs, optimized for fluency, lean toward predictable expressions, smoothing out the quirks (hesitations, odd metaphors) that mark human originality.
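The pull toward predictable expressions can be illustrated with the temperature parameter commonly applied when sampling from a model’s output distribution. The sketch below uses invented scores and a hypothetical function name (`softmax_with_temperature`); it is an illustration of temperature-scaled softmax, not any particular model’s implementation. At low temperature the most conventional word absorbs almost all of the probability, while the odd metaphor keeps a meaningful share only as the temperature rises.

```python
import math


def softmax_with_temperature(scores: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {w: s / temperature for w, s in scores.items()}
    peak = max(scaled.values())  # subtract the max before exp() for numerical stability
    exps = {w: math.exp(v - peak) for w, v in scaled.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


# Hypothetical scores for the word that follows "her voice was like ..."
scores = {"music": 3.0, "silk": 2.0, "rust": 0.5}

for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(scores, t)
    print(t, {w: round(p, 3) for w, p in probs.items()})
```

Raising the temperature does not add intent; it only spreads probability more evenly, so an unusual word becomes more likely by chance rather than by design.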

In reconstructing fragmented texts, AI might weave seamless narratives, but humans embrace ambiguity to probe deeper questions.

AI may fill gaps in medieval chronicles, but scholars’ creativity lies in leaving them open to invite inquiry. This distinction underscores why AI supports but does not supplant human insight.

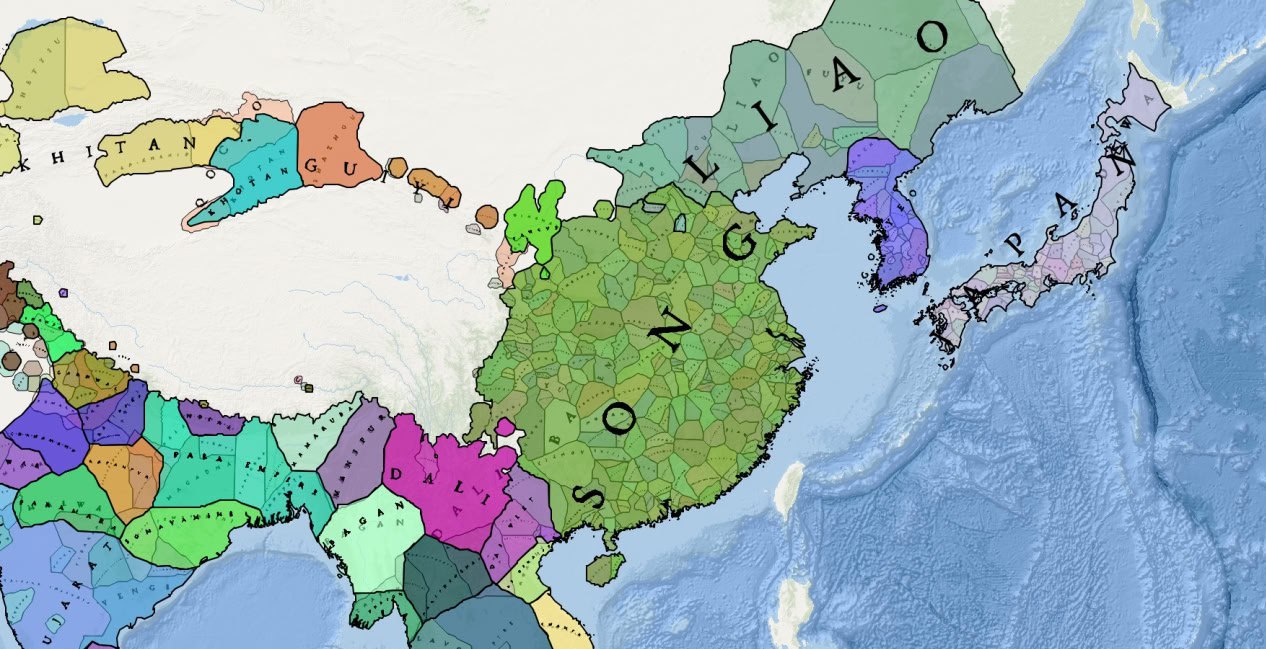

Cultural and Linguistic Context: Why Local Nuance Breaks

Creative language is embedded in cultural memory (idioms, silences, subtexts) that datasets rarely capture fully. In low-resource languages or historical dialects, AI’s generalizations distort meaning. Curated corpora and strict prompts help, but human oversight is essential.

Experiments with culturally anchored satire confirm this: models perform best with strict guardrails, context-rich prompts, and evaluation by native readers, not just automated metrics (Škobo & Šović, 2025a). Without these, AI risks flattening cultural nuances into generic outputs, diminishing the richness of local traditions.

The Mirror Problem

Readers project meaning onto coherent texts, making AI prose seem intentional even when it’s not. This “mirror problem” explains why such prose persuades, but it also misleads when AI generates authoritative-sounding analysis without understanding.

Disclosure, stating clearly what AI did (e.g., summarized sources), keeps human judgment central. For example, in cultural applications, AI might summarize archival texts, but their deeper significance requires human interpretation to avoid misrepresenting historical narratives (Watson, 2025).

Heritage, Memory, and the Cost of Freezing Style

In cultural-educational applications (simulating historical registers or building pedagogical tools), the problem shifts to authenticity. Training AI to “speak like” a past voice risks turning tradition into a catalogue of stylistic features. The language becomes a museum label: accurate but lifeless.

Work on digital replicas shows why curation matters: treating AI as an authority rather than a tool conflates style with expertise, flattening the dialogue between present readers and past texts (Škobo & Šović, 2025b).

For instance, a digital replica of a historical figure might engage audiences but oversimplify their legacy without careful design, reducing a complex life to a set of phrases. Human oversight ensures these tools enrich rather than distort cultural memory.

Practical Guardrails for Writers, Educators, and Editors

A practical framework guides responsible AI use:

- Define Roles: Limit AI to summarizing or rephrasing; keep claims and interpretations human.

- Curate Inputs: Use context-rich prompts and curated datasets to avoid generic outputs.

- Verify Outputs: Treat AI text as drafts needing source checks and reader feedback.

- Teach Limits: Show students the gap between form and meaning, using failed outputs as lessons.

These steps help preserve the authenticity of cultural and educational initiatives.

Conclusion

AI clarifies what’s reproducible (style, fluency) and what’s not (judgment, intent). Writers and educators need not compete with algorithms; instead, they can define AI’s role as a tool for discovery.

By setting boundaries for AI, we refine our understanding of what makes human expression unique, ensuring technology serves creativity rather than supplanting it.