From Text to Geo Map

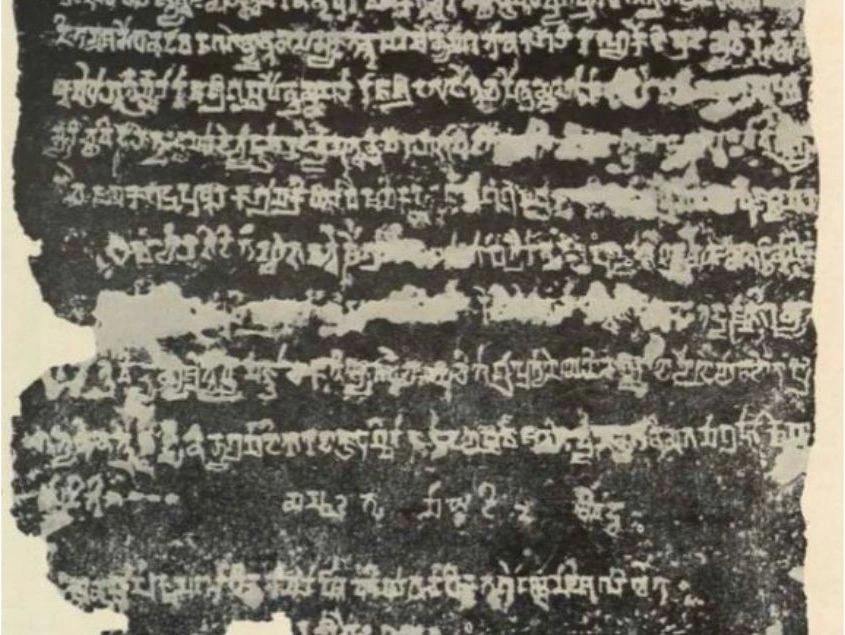

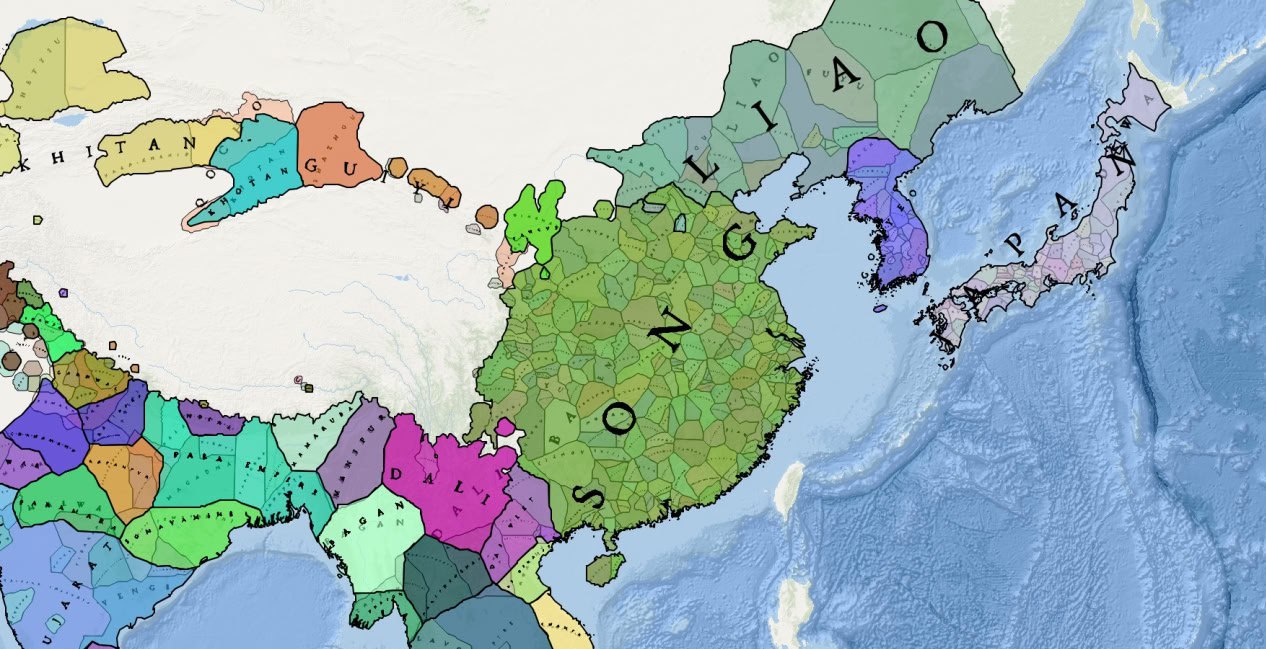

The Historica project is exploring new ways to create a dynamic historical map powered by AI. The idea is to go beyond simply analyzing existing historical geographic data and to incorporate methods used in historical research, historical analysis, and digital humanities. To do that, we’re tapping into textual sources to uncover and interpret information about borders, their shifts over time, and other key historical details, all of which are critical for understanding history through the lens of historical geography.

At the heart of our approach are ETL (Extract, Transform, Load) pipelines. These pipelines pull important data like places, dates, and events from historical texts and link them to geographic coordinates. Imagine this: the model reads an old account about a kingdom's expanding borders, extracts the key details, and brings the story to life on an interactive historical map. That output becomes the foundation for an AI-generated historical map that updates dynamically.
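To make the extract, transform, and load steps concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the project's actual code: the `HistoricalEvent` record, the tiny `GAZETTEER` with approximate coordinates, and the regular expression standing in for the model call are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class HistoricalEvent:
    """Hypothetical record linking an event to a place, a year, and coordinates."""
    place: str
    year: int
    description: str
    lat: Optional[float] = None
    lon: Optional[float] = None


# Hypothetical mini-gazetteer with approximate (lat, lon) values.
GAZETTEER = {
    "Hastings": (50.85, 0.57),
    "Rome": (41.90, 12.50),
}

# Stand-in for the model: matches sentences shaped like
# "In 1066, <something happened> near <Place>."
PATTERN = re.compile(r"In (\d{3,4}), (.*?) (?:at|in|near) ([A-Z][a-z]+)\.")


def extract(text: str) -> list[dict]:
    """Extract: pull raw year, description, and place strings from the text."""
    return [
        {"year": m.group(1), "description": m.group(2), "place": m.group(3)}
        for m in PATTERN.finditer(text)
    ]


def transform(raw: dict) -> HistoricalEvent:
    """Transform: convert types and attach coordinates when the place is known."""
    lat, lon = GAZETTEER.get(raw["place"], (None, None))
    return HistoricalEvent(raw["place"], int(raw["year"]), raw["description"], lat, lon)


def load(events: list[HistoricalEvent], database: list[HistoricalEvent]) -> None:
    """Load: append to an in-memory list that stands in for the database."""
    database.extend(events)


def run_pipeline(text: str, database: list[HistoricalEvent]) -> list[HistoricalEvent]:
    load([transform(raw) for raw in extract(text)], database)
    return database


db: list[HistoricalEvent] = []
sample = (
    "In 1066, William defeated Harold near Hastings. "
    "In 800, Charlemagne was crowned emperor in Rome."
)
run_pipeline(sample, db)
for event in db:
    print(event)
```

In a real pipeline the regular expression would be replaced by a model call and the gazetteer by a proper geocoding source, but the three-stage shape stays the same.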

Building the Foundations for a Rich Historical Database

By pulling together data from various sources, we aim to create a robust historical-geographical database. This would let us visualize changes dynamically on an interactive world map while providing rich historical context for each event. Beyond making history more accessible, this approach opens new doors for analyzing how borders and events evolved over time through tools similar to historical GIS, historical data mapping, and world history maps.

Tackling Challenges Along the Way

Of course, no ambitious project comes without its challenges. Here are some key obstacles we’re addressing:

AI “Hallucinations”. Models occasionally generate misleading data, such as mixing up dates or misidentifying events. For instance, the model might misinterpret a vague date and mistakenly record it as "Year 0 AD" (a year that does not exist in the BC/AD system) or add an extra digit to a year. While these errors are rare, they can have a significant impact when aiming for historical accuracy. To counter this, we’re building moderation systems that use a cascade of AI models to catch and correct errors, improving the quality of machine learning in historical research and reducing the risk of inaccuracies in the data extracted from historical documents.
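Some of the date errors described above can be caught with cheap deterministic checks before any model-based review. The sketch below is a rule-based pre-filter of our own devising for illustration, not the cascade of AI models itself; the function name `check_year` and the default bounds are hypothetical and would need tuning.

```python
import re


def check_year(year: int, source_text: str,
               min_year: int = -5000, max_year: int = 2100) -> list[str]:
    """Return a list of problems found with an extracted year (empty if none).

    Negative years stand for BC. The bounds are arbitrary assumptions.
    """
    problems = []
    if year == 0:
        # The BC/AD system goes from 1 BC straight to AD 1.
        problems.append("year 0 does not exist in the BC/AD calendar")
    if not (min_year <= year <= max_year):
        problems.append(f"year {year} is outside the plausible range")
    # Grounding check: the digits should appear as a whole number in the source.
    # This catches an extra digit, e.g. 10660 extracted from a text that says 1066.
    if not re.search(rf"\b{abs(year)}\b", source_text):
        problems.append(f"year {abs(year)} not found in the source text")
    return problems


passage = "In 1066, William defeated Harold near Hastings."
print(check_year(1066, passage))   # no problems expected
print(check_year(10660, passage))  # out of range and not in the source
print(check_year(0, passage))      # year zero
```

Records that fail any check could then be routed to a second model or a human reviewer instead of going straight into the database.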

Finding the Right Balance in Prompts. Our experiments revealed that simpler prompts often yield better results. For example, when a model was asked to extract data with just five parameters, it identified more than ten entities. When the prompt included seven or more parameters, it returned fewer than five. Simplifying prompts and breaking tasks into smaller steps have proven to enhance the model's accuracy on tasks such as topic modeling, text mining historical archives, or semantic analysis of historical texts.
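One way to keep each prompt small is to split the list of requested parameters into groups and send one request per group. The sketch below assumes a cap of five parameters per prompt, loosely based on the observation above; the field names and the prompt wording are hypothetical, and no model is actually called.

```python
def split_fields(fields: list[str], max_per_prompt: int = 5) -> list[list[str]]:
    """Split the requested fields into groups of at most max_per_prompt."""
    return [fields[i:i + max_per_prompt]
            for i in range(0, len(fields), max_per_prompt)]


def build_prompts(fields: list[str], passage: str,
                  max_per_prompt: int = 5) -> list[str]:
    """Build one small prompt per field group (wording is illustrative only)."""
    return [
        "Extract the following fields for every entity in the text: "
        + ", ".join(group)
        + ".\n\nText:\n"
        + passage
        for group in split_fields(fields, max_per_prompt)
    ]


# Hypothetical parameter list with eight fields.
FIELDS = ["name", "type", "start_year", "end_year",
          "location", "ruler", "predecessor", "successor"]

prompts = build_prompts(FIELDS, "In 800, Charlemagne was crowned emperor in Rome.")
print(len(prompts))  # 2: one prompt with five fields, one with three
```

The results from each group would then need to be merged per entity, which is a separate step not shown here.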

Making the Most of Context Windows. While the model supports a massive context window (up to 128K tokens) and large outputs (16K tokens), bigger isn’t always better. When we fed the model very large chunks of text, its accuracy dropped. Splitting these texts into smaller, digestible portions led to more precise and complete results, especially when dealing with big data in historical research, historical time series maps, or archival research that spans thousands of words.
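Splitting a long text into smaller portions can be sketched as follows. This is a simplified illustration: it counts whitespace-separated words as a rough stand-in for tokens, splits only at sentence-ending punctuation, and the `max_words` budget is a hypothetical value to tune, not a figure from our experiments.

```python
import re


def chunk_text(text: str, max_words: int = 200) -> list[str]:
    """Group whole sentences into chunks of at most max_words words.

    A single sentence longer than max_words is kept intact as its own chunk.
    """
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current, count = [], [], 0
    for sentence in sentences:
        if not sentence:
            continue
        n = len(sentence.split())
        # Start a new chunk when adding this sentence would exceed the budget.
        if current and count + n > max_words:
            chunks.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += n
    if current:
        chunks.append(" ".join(current))
    return chunks


demo = "One two three. Four five six. Seven eight nine."
for chunk in chunk_text(demo, max_words=6):
    print(repr(chunk))
```

Keeping sentences whole avoids cutting a date or place name in half, at the cost of chunks that vary somewhat in length.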

Looking Ahead

These challenges are helping us refine our process and improve how we validate and verify data. We’re already working on adding new quality checks to ensure that only the best data makes it into our historical database. This database is at the core of our interactive timeline of world history, allowing users to explore the past in a more dynamic and engaging way through interactive chronological maps and other digital history tools.

For more insights into our development process and experiments, check out the technology section on our website.